AI Governance 2024

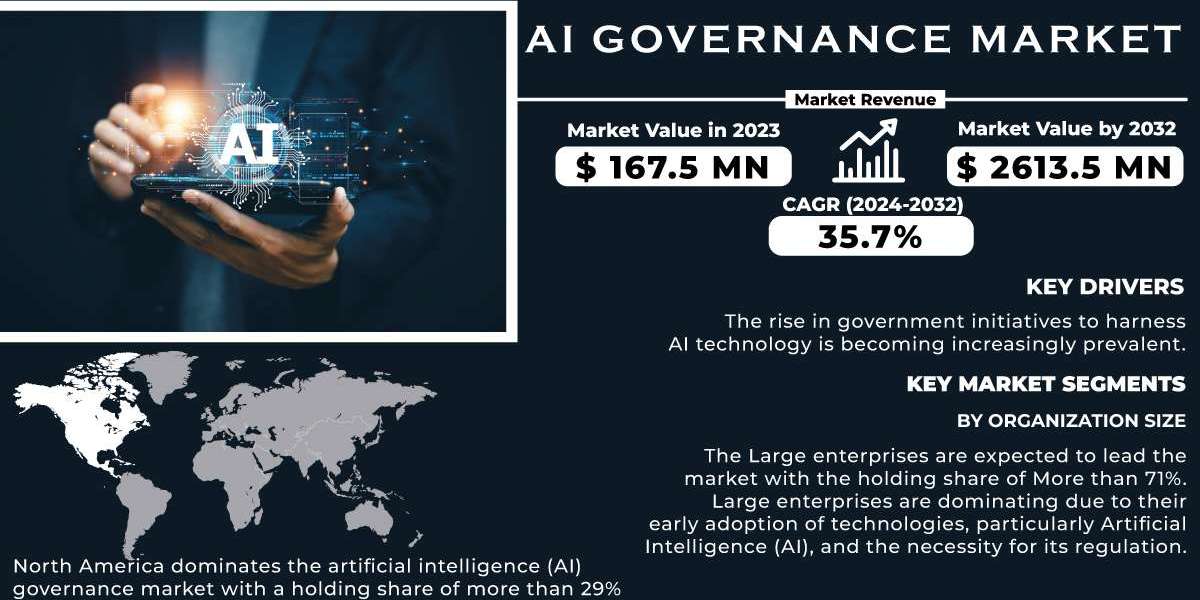

As artificial intelligence (AI) continues to permeate various aspects of society, the need for robust AI governance has become increasingly critical. AI governance refers to the frameworks, policies, and practices established to guide the ethical development and deployment of AI technologies. It covers accountability, transparency, fairness, and privacy, all of which are essential to keeping AI systems aligned with societal values and norms. The growth of the AI governance market reflects this need, as organizations recognize that structured governance is necessary to manage the complexities and challenges posed by AI technologies. The AI governance market was valued at USD 167.5 million in 2023 and is expected to reach USD 2,613.5 million by 2032, growing at a compound annual growth rate (CAGR) of 35.7% over the forecast period from 2024 to 2032.
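As a quick sanity check on the figures quoted above, the growth rate can be recomputed from the two market values. The sketch below is illustrative only: the function name `cagr` is hypothetical, and it assumes nine annual compounding steps from the 2023 base value to the 2032 projection.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate as a fraction (0.357 means 35.7%)."""
    return (end_value / start_value) ** (1 / periods) - 1


# Figures quoted in the text, in USD millions.
value_2023 = 167.5
value_2032 = 2613.5

# Assumption: nine annual compounding steps from the 2023 base to 2032.
periods = 2032 - 2023

rate = cagr(value_2023, value_2032, periods)
print(f"Implied CAGR: {rate:.1%}")
```

Under that assumption the implied rate comes out at roughly 35.7%, which is consistent with the stated CAGR.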

The rapid advancement of AI technologies has led to unprecedented capabilities in areas such as data analysis, natural language processing, and machine learning. While these developments promise significant benefits, they also raise concerns about bias, discrimination, and misuse. As organizations increasingly rely on AI for decision-making, the effects of these technologies on individuals and society demand a careful and responsible approach. Establishing effective AI governance is essential to mitigate risks and ensure that AI serves the public good.

Understanding AI Governance

AI governance encompasses a wide range of practices aimed at ensuring the responsible use of AI technologies. It includes the development of policies, guidelines, and standards that outline how AI should be designed, implemented, and monitored. A key component of AI governance is the establishment of accountability mechanisms, ensuring that individuals and organizations are held responsible for the outcomes of AI systems.

Transparency is another vital aspect of AI governance. Stakeholders must understand how AI systems operate, including the data used to train them and the algorithms that power them. This transparency fosters trust among users and allows for greater scrutiny of AI systems to identify and address potential biases or ethical concerns.

Moreover, AI governance seeks to ensure fairness in AI systems. This involves actively working to eliminate biases in AI algorithms and ensuring that AI technologies do not disproportionately impact certain groups of people. Fairness also encompasses the principle of equitable access to AI benefits, ensuring that the advantages of AI are distributed fairly across society.
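One way to make "disproportionate impact" measurable is to compare how often each group receives a favorable outcome. The sketch below is a minimal illustration of that idea, sometimes called a disparate impact ratio. It is only one of several possible fairness measures and not a method prescribed by this report. The function names, group labels, and decision data are all hypothetical.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes per group.

    `records` is an iterable of (group, outcome) pairs, where outcome is
    1 for a favorable decision and 0 otherwise.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        favorable[group] += outcome
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so rates are equal
    return min(rates.values()) / highest


# Hypothetical decisions, made up for illustration only.
decisions = [
    ("group_a", 1), ("group_a", 1), ("group_a", 1), ("group_a", 0),
    ("group_b", 1), ("group_b", 0), ("group_b", 0), ("group_b", 0),
]

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"Disparate impact ratio: {ratio:.2f}")
```

A ratio well below 1.0 indicates that one group receives favorable outcomes much less often than another, which would be a signal to investigate further rather than proof of unfairness on its own.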

Privacy and data protection are fundamental to effective AI governance. As AI systems often rely on vast amounts of data, it is crucial to implement measures that safeguard individuals' privacy rights. This includes adhering to data protection regulations and ensuring that data collection and processing practices are ethical and transparent.

The Role of Stakeholders in AI Governance

The successful implementation of AI governance requires collaboration among various stakeholders, including governments, organizations, researchers, and civil society. Governments play a crucial role in establishing regulatory frameworks and guidelines that govern AI use. By creating policies that promote ethical AI development and deployment, governments can help ensure that AI technologies align with societal values.

Organizations, particularly those developing and deploying AI technologies, must also take responsibility for governance. This includes implementing internal policies and practices that prioritize ethical considerations throughout the AI lifecycle. Organizations should conduct regular assessments of their AI systems to identify and mitigate potential risks, ensuring that their technologies are designed with ethics and accountability in mind.

Researchers contribute to AI governance by advancing our understanding of the ethical implications of AI technologies. Through interdisciplinary collaboration, researchers can provide insights into the social, ethical, and legal dimensions of AI, informing the development of effective governance frameworks. Furthermore, involving diverse perspectives in the research process can help identify and address biases and ensure that governance approaches are inclusive and equitable.

Civil society organizations also play a vital role in advocating for responsible AI governance. These organizations can raise awareness about potential risks and ethical concerns associated with AI technologies, advocating for policies that prioritize the public interest. Engaging with communities affected by AI technologies ensures that governance frameworks reflect diverse perspectives and address the needs of all stakeholders.

Challenges in Implementing AI Governance

While the need for AI governance is clear, implementing effective governance frameworks presents several challenges. One significant challenge is the rapid pace of AI development. The technology landscape is evolving so quickly that regulatory frameworks may struggle to keep pace with emerging innovations. This can create gaps in governance, leaving certain AI applications unregulated or inadequately addressed.

Another challenge is the complexity of AI systems themselves. Many AI algorithms, particularly those based on deep learning, function as "black boxes," making it difficult to understand how they arrive at specific decisions. This lack of transparency poses significant challenges for accountability and trust, as stakeholders may struggle to ascertain whether AI systems are operating fairly and ethically.

Moreover, achieving a consensus on ethical principles and governance standards can be challenging. Different stakeholders may have varying priorities and perspectives on what constitutes ethical AI use. Reconciling these differences requires ongoing dialogue and collaboration among stakeholders, which can be time-consuming and resource-intensive.

Data privacy concerns also complicate AI governance efforts. As AI systems often rely on vast amounts of personal data, balancing the need for data-driven insights with the protection of individual privacy rights is a complex challenge. Effective governance frameworks must navigate these competing interests while ensuring that individuals' rights are upheld.

Future Directions for AI Governance

Looking ahead, the future of AI governance will be shaped by ongoing developments in technology, society, and regulatory landscapes. As AI continues to evolve, governance frameworks must adapt to address emerging challenges and opportunities. One promising direction is the integration of AI ethics into the design and development process. By embedding ethical considerations into the AI lifecycle, organizations can proactively identify and mitigate potential risks before they cause harm.

Collaboration among stakeholders will be essential in shaping effective AI governance. Public-private partnerships, interdisciplinary research initiatives, and multi-stakeholder dialogues can facilitate the sharing of knowledge and best practices. Engaging with diverse communities will ensure that governance frameworks are inclusive and representative of societal needs.

Additionally, the role of international cooperation in AI governance cannot be overstated. Given the global nature of AI technologies, harmonizing governance standards across jurisdictions will be crucial in addressing challenges such as data privacy, bias, and accountability. International collaborations can foster shared understanding and facilitate the development of cohesive governance frameworks that transcend national boundaries.

Education and awareness-raising initiatives will also play a pivotal role in advancing AI governance. By educating stakeholders about the ethical implications of AI and the importance of governance, organizations can foster a culture of responsibility and accountability. Training programs that emphasize ethical AI practices will empower individuals and organizations to make informed decisions about AI development and deployment.

Conclusion

AI governance is essential to harnessing the transformative potential of artificial intelligence while mitigating its associated risks. As AI technologies continue to evolve, the need for effective governance frameworks becomes increasingly critical. By prioritizing accountability, transparency, fairness, and privacy, stakeholders can work together to establish a robust governance ecosystem that ensures AI serves the public good. The rapid growth of the AI governance market reflects the increasing recognition of the importance of these frameworks in navigating the complexities of AI. As organizations and governments embrace responsible AI governance, they can foster innovation that aligns with societal values, ultimately shaping a future where AI benefits everyone.

About Us

SNS Insider is one of the leading market research and consulting agencies operating globally. Our aim is to give clients the knowledge they need to operate in changing circumstances. To provide current, accurate market data, consumer insights, and opinions that support confident decisions, we use a variety of techniques, including surveys, video talks, and focus groups around the world.
